Intro

This post has two motivations:

- Demonstrate using ChatGPT’s specialized

Data Analystmodel to explore a problem starting with the initial problem statement, showing dialog, generated code (often much modified by me). This section is quite long, but I’d be interested in your thoughts about the options it suggests in each step along the way. - In the results, note possible overfitting (in this current anlaysis), or other basic challenges to producing statistically significant results given the small sample size in the original study. If you’re mostly interested in this, search for the Overfitting? heading below.

ChatGPT dialog

Me:

I have several gene count files and several transcript count files that are the output of an rnaseq pipeline, specifically Salmon. In the gene count files, there’s a gene ID column, a gene name column which can be ignored, and a column for each biological sample taken from oysters. The samples were taken from thermally-resilient families, from non-resilient families, and on day 0 and day 30 of a 30-day thermal stress regime for each of these families. I have a metadata csv file that represents which sample has each of these characteristics.

I’d like to explore whether there are genes that are statistically significantly associated with the thermal resilience, and further whether these genes if they exist, or other genes are present differently on day 0 and day 30 of the experiment. Can you recommend methods?

ChatGPT:

This is an exciting biological question, and exploring RNA-seq data for differentially expressed genes (DEGs) in this context is a well-established approach. Here’s a general roadmap for analyzing your data to address your questions:

1. Preparing the Data

-

Combine Gene Counts: Combine your gene count files into a single matrix, where rows represent genes and columns represent samples.

-

Metadata Matching: Ensure that your combined count matrix aligns with your metadata file (sample names should match exactly).

-

Filter Low Counts: Remove genes with consistently low counts across all samples to reduce noise.

2. Exploratory Data Analysis (EDA)

-

QC Metrics: Check for outliers using principal component analysis (PCA) or hierarchical clustering.

-

Normalization: Apply normalization methods to account for library size and compositional differences (e.g., using

DESeq2oredgeR). -

PCA/Clustering: Perform PCA or clustering to observe grouping patterns between thermal-resilient vs. non-resilient families and days 0 vs. 30.

3. Differential Gene Expression Analysis Use statistical methods to identify genes associated with thermal resilience and temporal changes. Here are the key steps:

- Set Up Your Model

- Use a tool like

DESeq2oredgeRto analyze differential expression. Define factors and contrasts:-

Thermal Resilience: Compare resilient vs. non-resilient families.

-

Day 0 vs. Day 30: Test for temporal changes within each family type.

-

Interaction Effect: Test whether changes from day 0 to day 30 differ between resilient and non-resilient families.

-

- Use a tool like

- Statistical Tests

-

Adjust for multiple testing using methods like Benjamini-Hochberg (FDR correction).

-

Look for genes with significant adjusted p-values and log2 fold-changes.

-

4. Gene Set Enrichment Analysis (GSEA)

-

Annotate genes with biological pathways (e.g., GO terms, KEGG pathways).

-

Perform enrichment analysis to identify pathways or functions enriched in DEGs.

5. Validation and Interpretation

- Visualize key findings:

-

Heatmaps of DEGs.

-

Volcano plots for significant genes.

-

Temporal profiles of specific genes of interest.

-

- Validate results with external data or literature.

Recommended Tools

- Statistical Analysis:

-

DESeq2(R package) for differential gene expression. -

edgeRorlimma(R packages) as alternatives.

-

- Visualization:

-

ggplot2for custom plots. -

ComplexHeatmap for heatmaps.

-

- Gene Annotation:

-

Bioconductor packages like

org.*.dbfor annotation. -

clusterProfilerfor pathway analysis.

-

- Pathway Analysis:

- GSEA tools (e.g., Broad Institute’s GSEA software or

fgseaR package).

- GSEA tools (e.g., Broad Institute’s GSEA software or

Extensions for Your Questions

- Thermal Resilience Genes:

- Compare resilient vs. non-resilient families, regardless of time point.

- Temporal Dynamics:

- Compare day 0 vs. day 30, for both resilient and non-resilient families.

- Test for interaction effects to identify genes where changes depend on resilience status.

- Integrative Analysis:

- Investigate whether genes associated with resilience also show temporal dynamics.

- Use clustering to group DEGs with similar patterns over time or between groups.

Let me know if you’d like help implementing any part of this analysis or if you need guidance on specific tools!

Me:

Yes, let’s walk through the entire process. How long do you think it will take?

ChatGPT:

The time required to walk through the entire analysis depends on your dataset’s size, your familiarity with tools like R or Python, and the computational resources available. Here’s a breakdown of the steps and their approximate time requirements:

1. Preparing the Data (1–2 hours)

-

Combining Gene Counts: If the data is well-structured, merging files can take 30–60 minutes.

-

Metadata Matching: Ensure metadata and count matrix alignment (~15–30 minutes).

-

Filtering Low Counts: Depending on the tool (e.g., filtering by counts-per-million), this takes ~15 minutes.

2. Exploratory Data Analysis (EDA) (1–2 hours)

- QC and Normalization:

- Normalizing the data (e.g., using

DESeq2) may take ~30–60 minutes depending on data size.

- Normalizing the data (e.g., using

- PCA/Clustering: Generating PCA plots and heatmaps will take ~30–60 minutes.

3. Differential Gene Expression Analysis (3–5 hours)

- Setting Up Models:

- Preparing the design matrix and contrasts in

DESeq2oredgeRmay take ~1 hour.

- Preparing the design matrix and contrasts in

- Running the Analysis:

- Differential expression computation for multiple contrasts (e.g., thermal resilience, day 0 vs. 30) can take 1–2 hours, depending on data size.

- Adjusting for Multiple Testing: Done automatically by tools like

DESeq2; takes a few minutes.

4. Gene Set Enrichment Analysis (2–4 hours)

- Annotation:

- Annotating gene IDs with GO terms, KEGG pathways, or other resources may take ~1–2 hours, depending on data size and tool availability.

- Pathway Analysis:

- Running enrichment analysis (e.g., GSEA or pathway overrepresentation) can take 1–2 hours.

5. Validation and Visualization (2–3 hours)

- Visualizations:

- Generating volcano plots, heatmaps, or temporal profiles may take ~1–2 hours, depending on the number of plots.

- Validation:

- Reviewing external literature and interpreting findings could take ~1 hour or longer.

6. Reporting and Interpretation (2–4 hours)

- Summarizing results into a report with key figures and biological interpretations could take a few hours.

Overall Estimate For a full analysis, 10–18 hours of focused work is a reasonable estimate, provided that data is already prepared and tools are installed. If unfamiliar with specific tools, it may take longer to troubleshoot or understand the workflows.

Next Steps To proceed, let’s:

-

Confirm that your data (gene counts and metadata) is ready for analysis.

-

Decide on the tools you want to use (

Ror Python). -

Walk step-by-step through the process, starting with data preparation.

Let me know how you’d like to proceed!

Me:

OK, let’s go.

ChatGPT:

Great! Let’s dive in, step by step. We’ll start with data preparation . Here’s what we’ll do:

Step 1: Data Preparation

- Gene Count Files :

- Combine individual gene count files into a single matrix, with rows as genes and columns as samples.

- Ensure that only the gene ID and count data are retained (ignore gene names).

- Metadata :

- Check that the metadata CSV file matches the sample names in the combined count matrix.

- Add any needed columns or correct inconsistencies in the metadata (e.g., resilience status, day).

- Filtering Low Counts :

- Remove genes with consistently low expression (e.g., genes with fewer than 10 reads across all samples).

What I Need from You

- Gene Count Files :

- Provide the individual gene count files or a combined file if it’s already available.

- Metadata File :

- Provide the metadata CSV file.

Once I have these files, I’ll:

-

Load them.

-

Combine and clean the data.

-

Show you an initial summary to confirm everything is in order before moving forward.

You can upload the files here, and we’ll proceed!

Me:

salmon.merged.gene_counts_2023_seqera.tsv Filedeseq metadata

| sample | thermal.tolerance | family | day |

|---|---|---|---|

| SRX5644308 | susceptible | 500 | 0 |

| SRX5644309 | susceptible | 500 | 0 |

| SRX5644323 | susceptible | 500 | 0 |

| SRX5644314 | susceptible | 500 | 30 |

| SRX5644315 | susceptible | 500 | 30 |

| SRX5644316 | susceptible | 500 | 30 |

Not all of the samples are included in the metadata file, so please only work with those that are.

ChatGPT:

Analyzed

import pandas as pd

# Load the gene counts and metadata files

gene_counts_path = '/mnt/data/salmon.merged.gene_counts_2023_seqera.tsv'

metadata_path = '/mnt/data/deseq_metadata2.csv'

# Read the gene counts

gene_counts = pd.read_csv(gene_counts_path, sep='\t', index_col=0)

# Read the metadata

metadata = pd.read_csv(metadata_path)

# Ensure only samples present in the metadata are kept in the gene counts

samples_in_metadata = metadata['sample'].tolist()

filtered_gene_counts = gene_counts.loc[:, gene_counts.columns.intersection(samples_in_metadata)]

# Display a summary of the processed data

filtered_gene_counts_summary = filtered_gene_counts.describe()

metadata_summary = metadata.describe()

filtered_gene_counts.shape, metadata.shape

The dataset has been filtered and aligned with the metadata. Here’s a summary:

- Gene Count Matrix:

- Rows (genes): 33,695

- Columns (samples): 24 (matches the 24 samples listed in the metadata).

- Metadata:

- Contains 24 samples and 4 columns of information. Let me know if you’d like to inspect the filtered data or if we should proceed to filtering out low-expressed genes.

Me:

Show metadata details for verification.

ChatGPT:

Analyzed

# Display metadata details for user verification

metadata.head()

Here are the first few rows of the metadata for verification:

-

sample: The sample name (e.g.,

SRX5644308). -

thermal.tolerance: Indicates thermal resilience status (

susceptibleor other categories). -

family: Represents the family group (e.g.,

500). -

day: Experimental day (

0or30). Let me know if this looks correct or if there are adjustments needed before proceeding.

Me:

Filter low-expression genes for analysis.

ChatGPT:

Analyzed

# Define a threshold for low expression: genes with fewer than 10 counts across all samples

low_expression_threshold = 10

# Sum counts across all samples for each gene

gene_sums = filtered_gene_counts.sum(axis=1)

# Filter genes where the total count is greater than the threshold

filtered_gene_counts_high_expression = filtered_gene_counts[gene_sums > low_expression_threshold]

# Display the shape of the filtered gene counts for verification

filtered_gene_counts_high_expression.shape

After filtering low-expression genes, the dataset now contains:

-

Genes: 28,013 (filtered from the original 33,695).

-

Samples: 24 (unchanged). This removes genes with fewer than 10 total counts across all samples. Let me know if you’d like to proceed with exploratory data analysis (EDA) or inspect this filtered dataset further.

Me:

proceed with exploratory data analysis. In the PCA plot, indicate which samples have which characteristics as indicated by the metadata file.

ChatGPT:

Exploratory Data Analysis (EDA)

EDA will help us understand the dataset’s structure and verify its quality. Here’s what we’ll do:

1. Summary Statistics We’ll calculate overall statistics for the expression data.

2. Normalization Normalization is essential for RNA-seq data to account for differences in library sizes and sequencing depth.

3. PCA (Principal Component Analysis) PCA will help us visualize sample clustering and identify patterns or outliers.

4. Heatmap of Top Genes A heatmap of the top varying genes across samples will provide insights into sample relationships.

Step 1: Summary Statistics Let me summarize the normalized and unnormalized data first. Analyzed

# Summary statistics for the raw (unnormalized) gene count data

raw_summary = filtered_gene_counts_high_expression.describe()

# Calculate library sizes (total counts per sample)

library_sizes = filtered_gene_counts_high_expression.sum(axis=0)

# Display key statistics

raw_summary, library_sizes

Here’s a summary of the data:

- Gene Count Statistics Across Samples (Raw Data):

- Mean counts per sample: Varies across samples, ranging roughly from ~170 to ~430.

- Maximum counts: Large variance in expression levels across genes, with some genes highly expressed (>100,000 counts).

- Library Sizes:

- Total counts per sample range from ~4.7 million to ~12.1 million, indicating variability in sequencing depth.

Next, we’ll normalize the data to address these differences in library sizes. Then, we’ll proceed to PCA to explore the sample relationships. Let’s normalize the data!

# Map metadata to samples for thermal tolerance and day

sample_metadata = metadata.set_index('sample')

color_map = {'resistant': 'blue', 'susceptible': 'red'}

colors = [color_map[sample_metadata.loc[sample, 'thermal.tolerance']] for sample in filtered_gene_counts_high_expression.columns]

days = sample_metadata.loc[filtered_gene_counts_high_expression.columns, 'day'].tolist()

# Create PCA scatter plot with thermal tolerance color coding and day labels

plt.figure(figsize=(10, 8))

for i, sample in enumerate(filtered_gene_counts_high_expression.columns):

plt.scatter(pca_results[i, 0], pca_results[i, 1], color=colors[i], s=50, alpha=0.7)

plt.text(pca_results[i, 0], pca_results[i, 1], f"Day {days[i]}", fontsize=8, ha='right')

plt.title("PCA of Samples (Colored by Thermal Tolerance)")

plt.xlabel(f"PC1 ({pca.explained_variance_ratio_[0]*100:.1f}%)")

plt.ylabel(f"PC2 ({pca.explained_variance_ratio_[1]*100:.1f}%)")

plt.grid(True)

# Add legend

legend_handles = [

plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='blue', markersize=10, label='Resistant'),

plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='red', markersize=10, label='Susceptible')

]

plt.legend(handles=legend_handles, title="Thermal Tolerance")

plt.show()

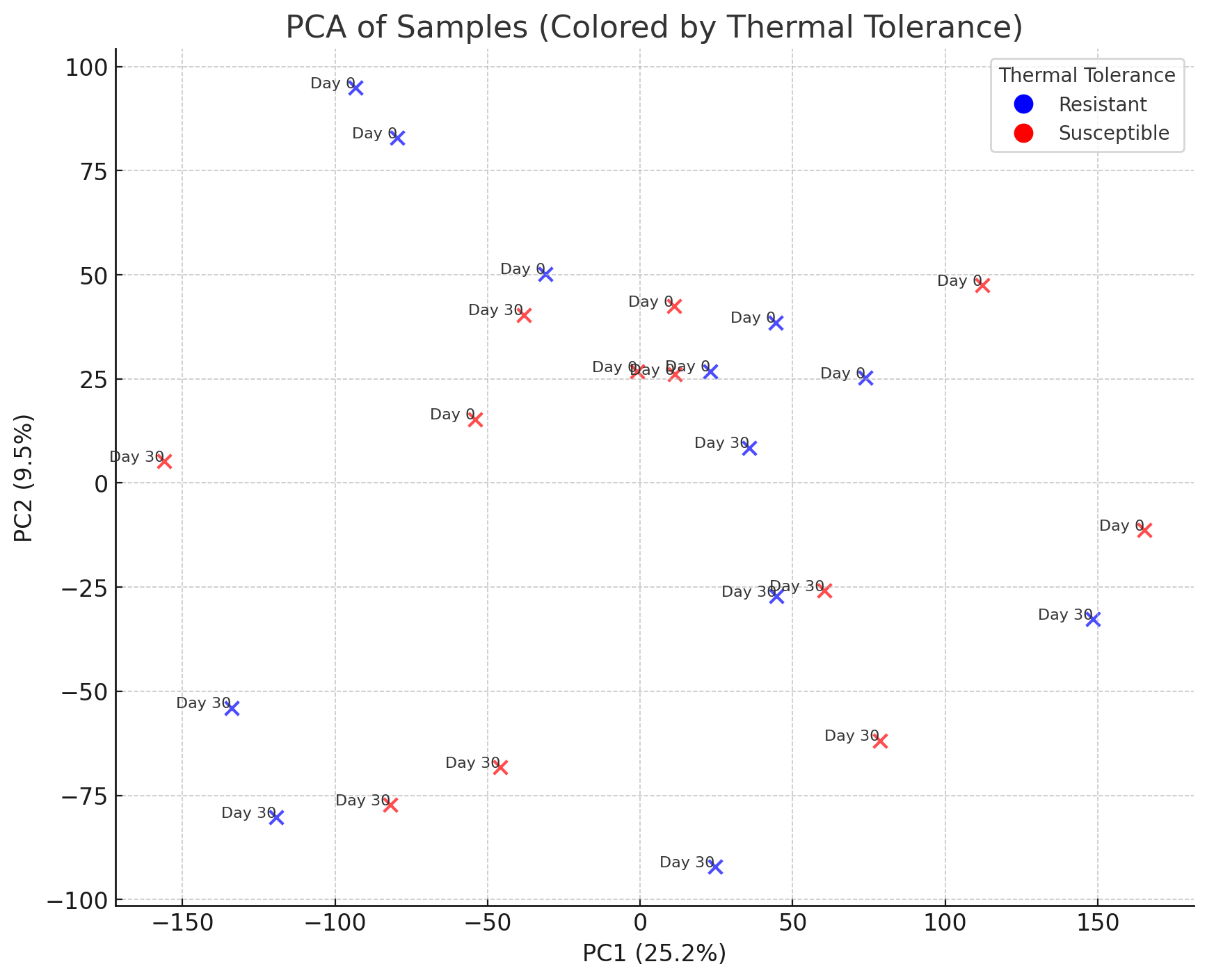

PCA of Samples (Top 2 PCs) The PCA plot is now complete. Here’s what it shows:

The PCA plot is now complete. Here’s what it shows:

-

Principal Component 1 (PC1) explains 25.2% of the variance.

-

Principal Component 2 (PC2) explains 9.5% of the variance.

- Color-coded by thermal tolerance:

-

Blue: Resistant samples.

-

Red: Susceptible samples.

-

- Day labels: Indicate the experimental day (

0or30). This visualization allows us to see if thermal tolerance or temporal changes (Day 0 vs. Day 30) drive clustering. Let me know if you’d like to refine this further or move on to differential gene expression analysis.

The samples are plotted based on their clustering along these two components. Samples with similar gene expression profiles are closer together. If you observe distinct groupings, these could correlate with biological variables (e.g., resilience or timepoint). Let me know if you’d like to proceed with further visualizations or move on to differential gene expression analysis.

Would you like me to perform a statistical test (e.g., ANOVA on PCA scores) to assess whether these patterns are significant, or proceed to identifying differentially expressed genes?

Me:

Because I’m looking for genes that are correlated with thermal resilience, would it make sense to try to find those at this point?

ChatGPT:

Yes, focusing on identifying genes correlated with thermal resilience is a logical next step. To do this, we can perform differential expression analysis to pinpoint genes whose expression levels differ significantly between the two thermal tolerance groups (resistant vs. susceptible ).

Steps to Identify Resilience-Associated Genes

- Set Up the Analysis:

- Define the resilience status (

resistantvs.susceptible) as the primary factor.

- Define the resilience status (

- Use the metadata to assign group labels to samples.

- Differential Expression Analysis:

- Compare gene expression between the two groups.

- Use statistical tools such as

DESeq2(R) or Python equivalents (statsmodels,scipy) to compute:-

Log fold change (effect size).

-

Adjusted p-values (e.g., using Benjamini-Hochberg correction for multiple testing).

-

- Filter Significant Genes:

-

Identify genes with significant p-values and meaningful log fold changes (e.g., log2FC > 1).

-

- Visualize Results:

- Volcano Plot: Highlight significant genes.

- Heatmap: Display expression levels of top differentially expressed genes.

Would you like to proceed with this workflow? If so, I’ll set up the analysis using the filtered data and metadata.

Me:

Visualize top varying genes with heatmap.

ChatGPT:

Analyzed

import seaborn as sns

# Calculate variance for each gene across all samples

gene_variances = log_cpm.var(axis=1)

# Select the top 50 most variable genes

top_genes = gene_variances.nlargest(50).index

# Subset the log-transformed CPM data for these top genes

top_genes_data = log_cpm.loc[top_genes]

# Create a heatmap of the top varying genes

plt.figure(figsize=(12, 10))

sns.heatmap(

top_genes_data,

cmap="viridis",

yticklabels=False,

xticklabels=filtered_gene_counts_high_expression.columns,

cbar_kws={"label": "Log2(CPM + 1)"}

)

plt.title("Heatmap of Top 50 Most Variable Genes Across Samples")

plt.xlabel("Samples")

plt.ylabel("Genes")

plt.show()

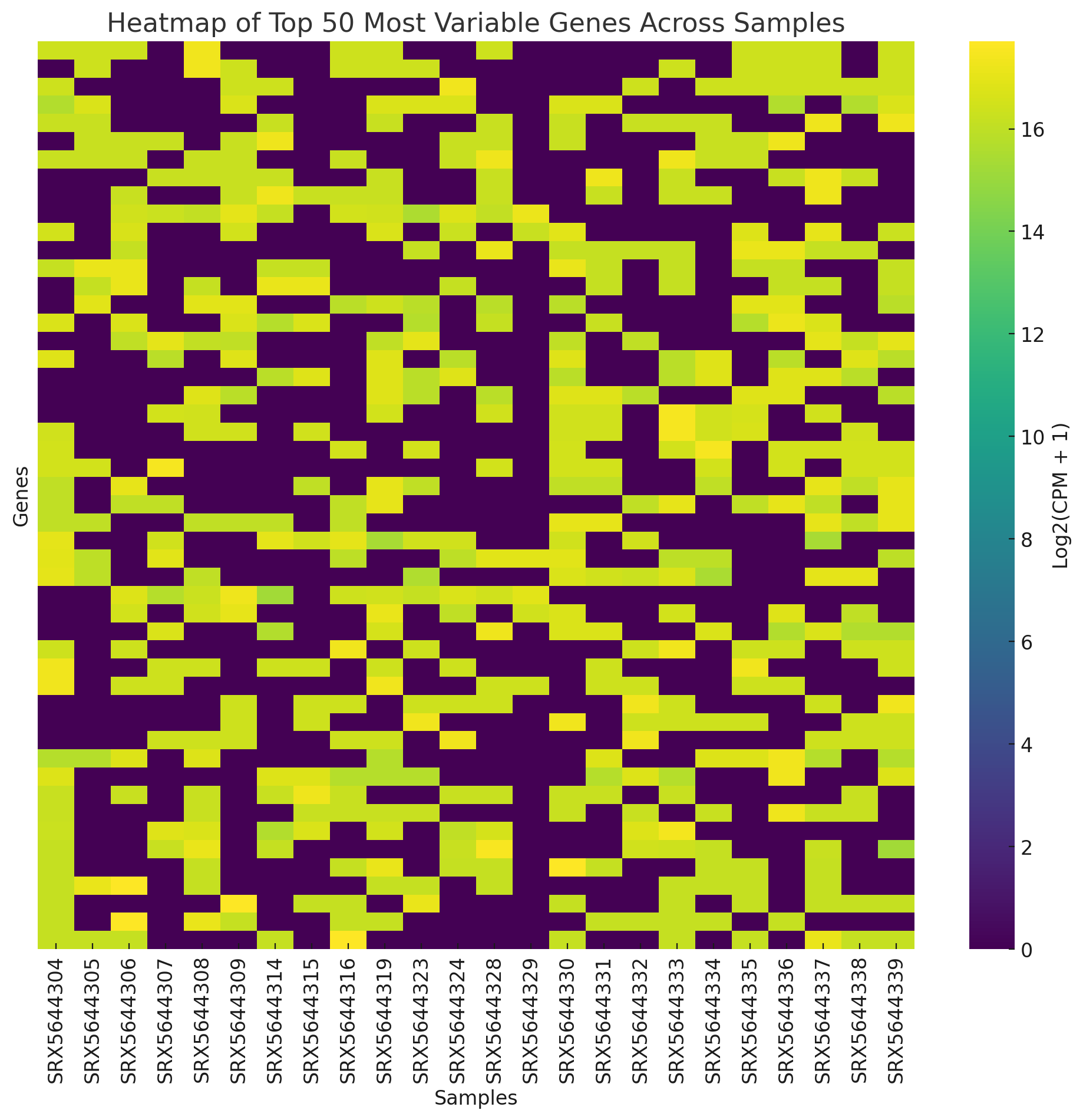

The heatmap displays the expression patterns of the top 50 most variable genes across all samples. Here’s what you can observe:

The heatmap displays the expression patterns of the top 50 most variable genes across all samples. Here’s what you can observe:

-

Color intensity represents the log-transformed CPM values.

-

Sample clustering: Examine if similar samples group together (e.g., by thermal tolerance or day).

-

Gene patterns: Look for clusters of genes with similar expression profiles. If specific patterns are evident, they may hint at genes driving differences between the groups. Let me know if you’d like further analysis or interpretation of this heatmap.

Me:

Please group the samples by (1) thermal resilience and (2) day 0 vs day 30. Please show those groupings in the axis labels

ChatGPT:

Analyzed

# Reorder samples by thermal resilience and day based on metadata

metadata_sorted = metadata.sort_values(by=['thermal.tolerance', 'day'])

sorted_samples = metadata_sorted['sample']

# Subset and reorder the heatmap data to match the sorted samples

top_genes_data_sorted = top_genes_data[sorted_samples]

# Create custom labels for the x-axis showing both thermal resilience and day

x_labels = [

f"{metadata_sorted.loc[metadata_sorted['sample'] == sample, 'thermal.tolerance'].values[0]}-Day{metadata_sorted.loc[metadata_sorted['sample'] == sample, 'day'].values[0]}"

for sample in sorted_samples

]

# Create heatmap with labeled groupings

plt.figure(figsize=(16, 10))

sns.heatmap(

top_genes_data_sorted,

cmap="viridis",

yticklabels=False,

xticklabels=x_labels,

cbar_kws={"label": "Log2(CPM + 1)"}

)

plt.title("Heatmap of Top 50 Most Variable Genes (Grouped by Thermal Resilience and Day)")

plt.xlabel("Samples (Resilience-Day)")

plt.ylabel("Genes")

plt.xticks(rotation=90, fontsize=8)

plt.show()

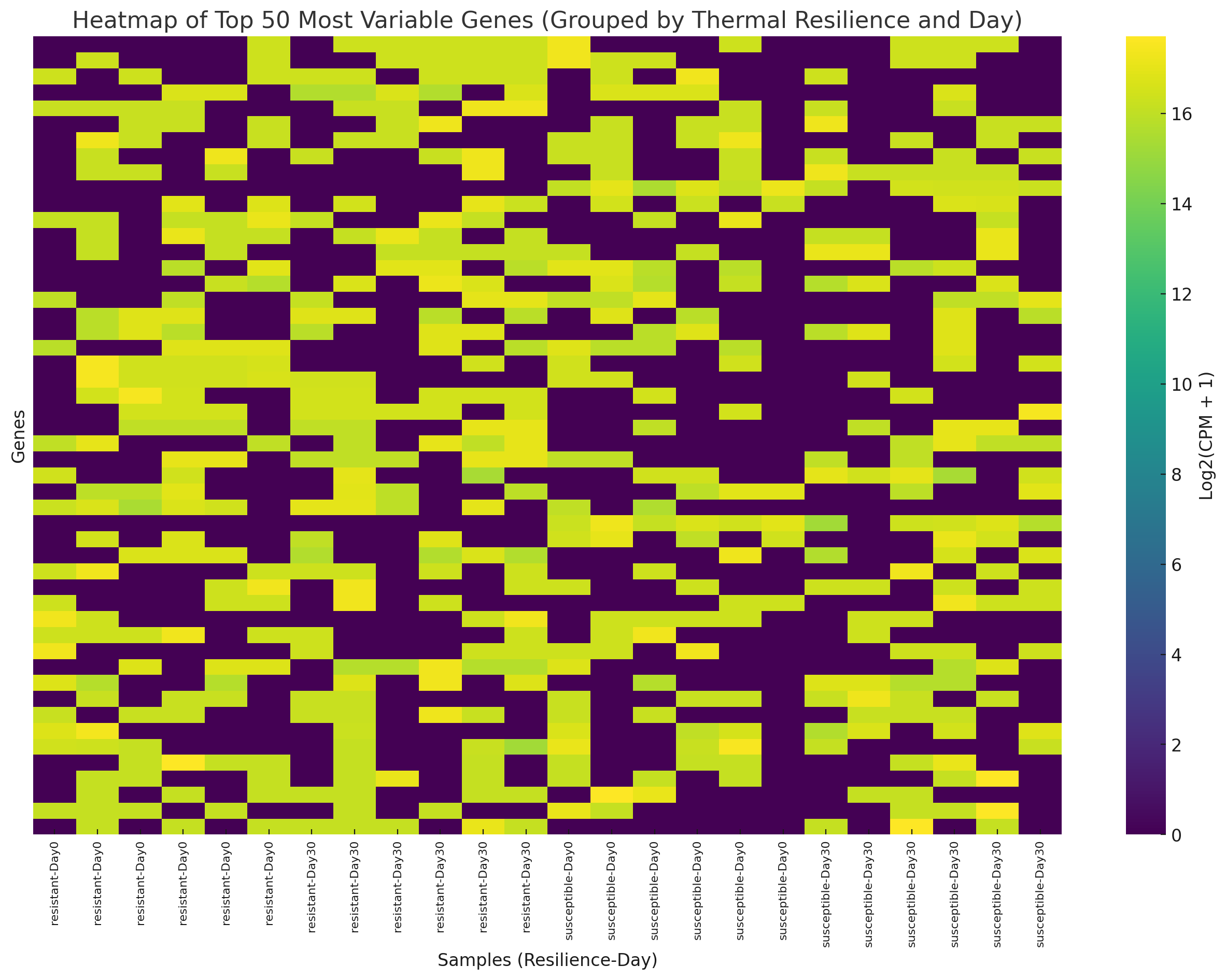

The heatmap has been updated with samples grouped by:

The heatmap has been updated with samples grouped by:

-

Thermal Resilience: Samples are grouped into resistant and susceptible categories.

-

Day: Within each thermal resilience group, samples are further grouped by Day 0 and Day 30 . This organization highlights expression patterns related to thermal resilience and temporal changes. Let me know if you’d like further analysis or move on to identifying genes correlated with resilience.

Me:

This looks like random variation by thermal resilience and day count. Is there a way to check the entropy?

ChatGPT:

To assess whether the observed variation in the heatmap is random or shows a structured relationship with thermal resilience and day, we can calculate entropy or related metrics for the expression patterns. Here’s how this can be approached:

Entropy Analysis

- Shannon Entropy :

- Compute the entropy for each gene across samples.

- Lower entropy indicates more structured (less random) patterns, whereas higher entropy suggests random variation.

- Mutual Information :

- Measure how much information gene expression provides about groupings (e.g., resilience or day).

- A high mutual information score indicates a stronger association.

- Permutation Testing :

- Shuffle group labels (resilience or day) and re-calculate entropy or mutual information.

- Compare actual values with the distribution of permuted values to assess significance.

Would you like to calculate Shannon Entropy , Mutual Information , or perform a permutation test ? Let me know which approach you prefer, and I’ll proceed!

Me:

Mutual information. [Much intervening dialog omitted] I find that each time I run this, I obtain different results because it’s a stochastic algorithm. So let’s loop through it several times, taking the average, so we can be assured that we have a stable set of the top mutual information-scoring genes.

Result

Combined Mutual Information Results for All 50 Genes (Cleaned)

| Gene | Mutual_Information |

|---|---|

| LOC109619892 | 0.7956492505695802 |

| LOC105323594 | 0.5384803863670469 |

| LOC105333998 | 0.48137837710752973 |

| LOC105330469 | 0.43134789288586894 |

| LOC105335470 | 0.39606318848351774 |

Me:

Would it be useful to produce another PCA plot that considered only the genes that show mutual information? If so, what would you suggest for an information threshold value?

ChatGPT:

Creating another PCA plot based only on genes with meaningful mutual information scores can help reveal whether these genes provide clearer clustering based on thermal resilience and day. This approach focuses on genes that are likely driving differences between the groups.

Suggested Threshold for Mutual Information

The choice of a threshold depends on the range of mutual information scores in the data:

-

High-Scoring Genes: Consider genes with mutual information scores in the top 10% (e.g., scores > 0.3 for your dataset).

-

Statistical Cutoff: If the distribution of scores shows a natural break, use it as a cutoff.

-

Flexible Exploration: Test thresholds like 0.2 or 0.3 to balance sensitivity and specificity.

Would you like to:

-

Use a specific threshold (e.g., 0.3) to select genes?

-

Visualize the distribution of mutual information scores to identify a natural threshold?

Me:

Let’s try the top 10 genes.

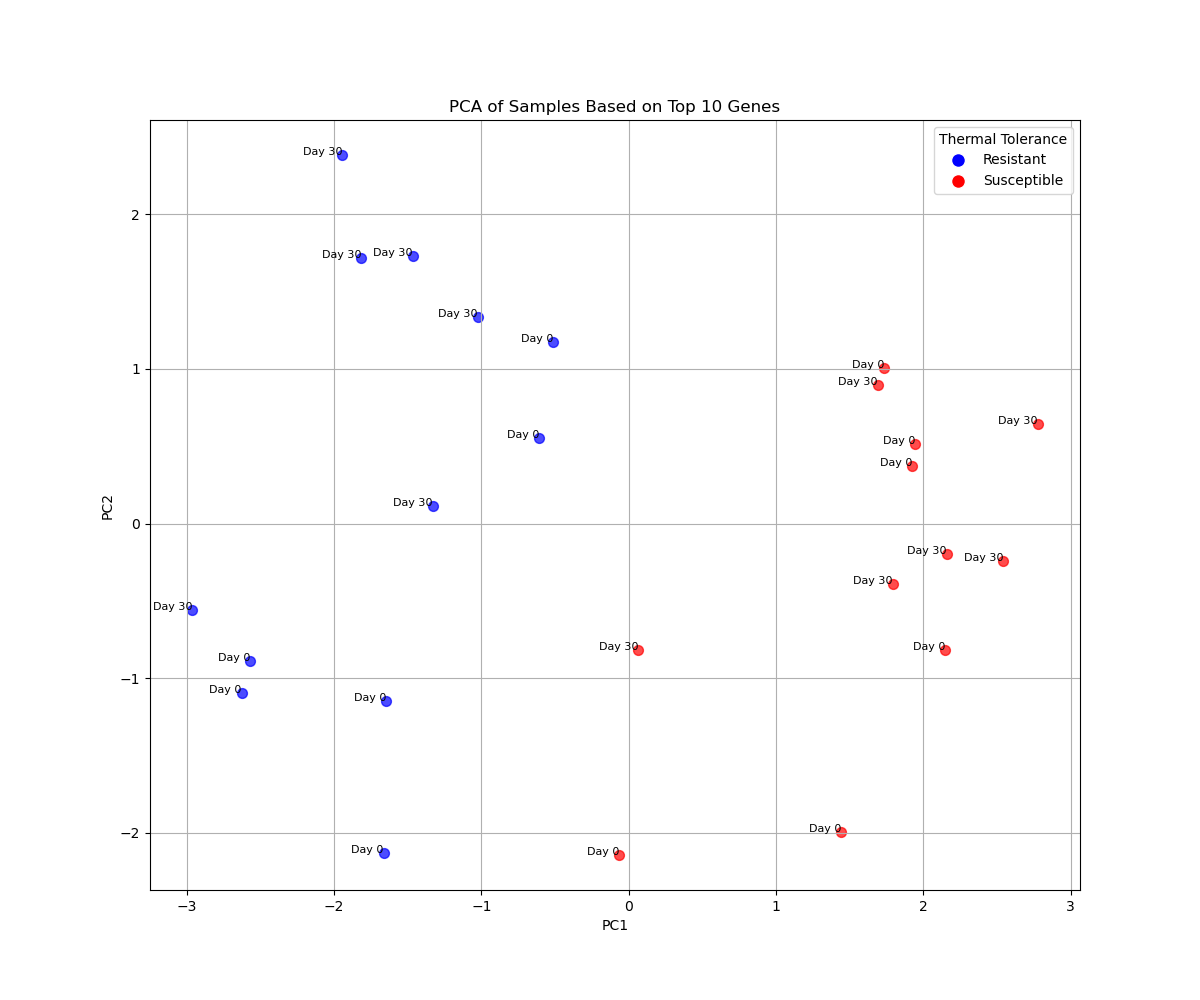

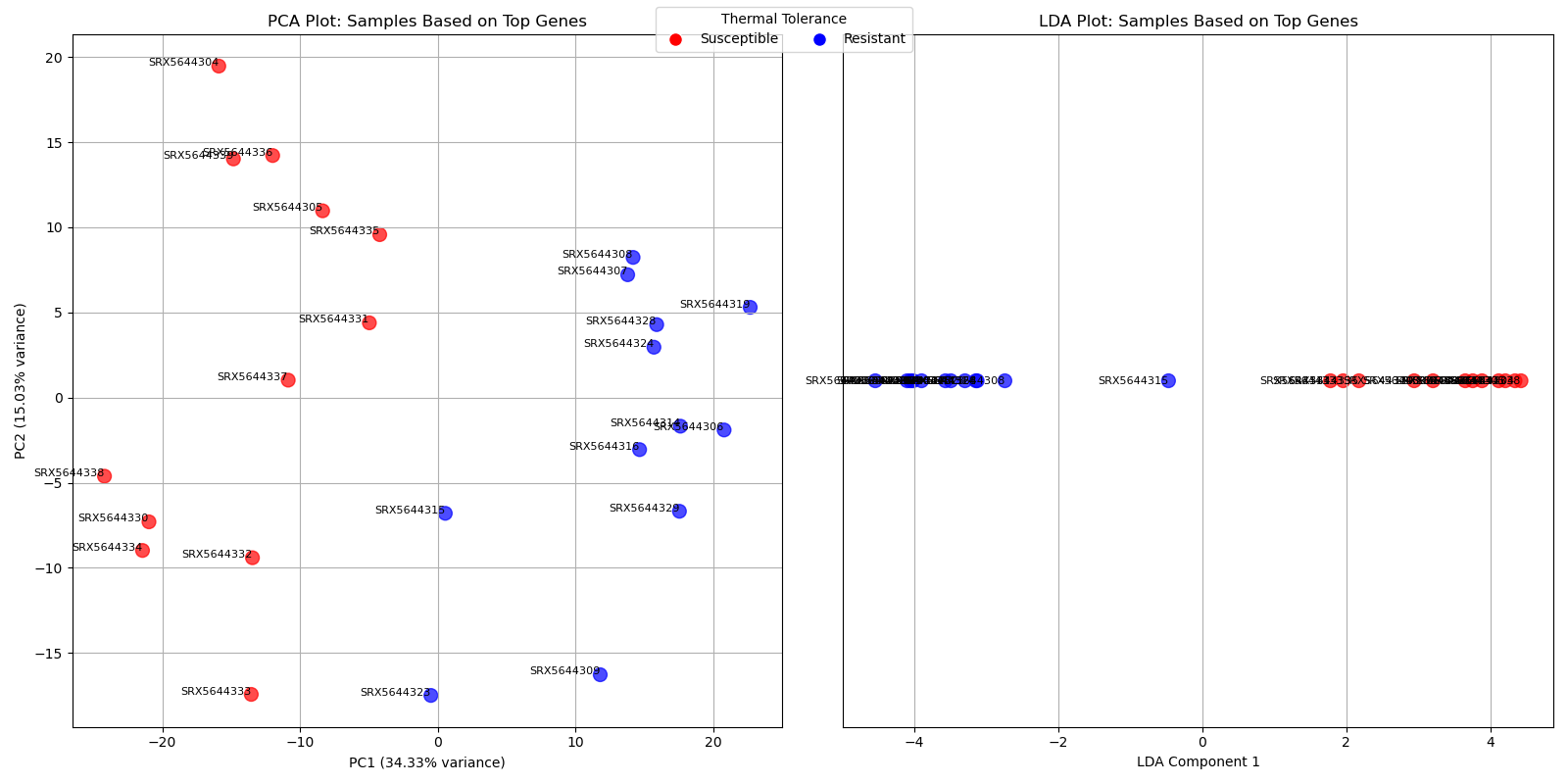

[The following PCA plot was generated after more ChatGPT dialog that resulted with the code showng farther below.]

Me:

The top 10 plot shows good visual separation between resistant and susceptible. What would be a good way to analyze that further?

ChatGPT:

The visual separation in the top 10 genes plot suggests these genes may be strongly associated with thermal resilience. To further analyze and quantify this separation, you can consider the following approaches:

1. Statistical Validation of Clustering

- Cluster Analysis:

-

Use clustering algorithms (e.g., k-means or hierarchical clustering) to group samples.

-

Evaluate whether clusters align with the thermal resilience labels.

-

- Silhouette Score or Adjusted Rand Index (ARI):

- Quantify the quality of clustering based on known labels.

2. Dimensional Separation Metrics

- Linear Discriminant Analysis (LDA):

- Assess whether a linear combination of the gene expression values can clearly separate the two groups (resistant vs. susceptible).

- t-SNE or UMAP:

- Explore alternative dimensionality reduction techniques to confirm or enhance separation.

3. Differential Gene Expression

-

Revisit the differential expression analysis using just the top 10 genes.

-

Perform a statistical comparison of gene expression between resistant and susceptible groups.

4. Gene Set Enrichment or Pathway Analysis

- Investigate the biological significance of these genes by:

-

Annotating them with gene ontology (GO) terms or pathways.

-

Performing enrichment analysis to identify overrepresented functions.

-

5. Cross-Validation

- Use machine learning (e.g., logistic regression, SVM):

-

Train a model using the expression values of the top 10 genes to predict thermal resilience.

-

Validate the model’s performance (accuracy, F1-score) on held-out samples.

-

Would you like to proceed with one of these methods? For example, I can perform cluster analysis or calculate metrics like LDA separation to validate the observed separation. Let me know your preference!

Me:

Cross-validation using machine learning is interesting, but do we have enough samples to validate the model’s performance based on held-out samples?

ChatGPT:

Good question! With only 24 samples , cross-validation and model validation can be challenging but still feasible with careful implementation. Here’s how to approach it:

1. Challenges with Small Sample Size

-

Risk of Overfitting: With few samples, models might memorize rather than generalize.

-

Limited Diversity: Fewer samples limit the model’s ability to learn complex patterns.

-

Validation Set Size: A small dataset limits the size of the test set in cross-validation, making performance metrics less robust.

2. Strategies to Address These Challenges

- Stratified Cross-Validation:

- Use stratified k-fold cross-validation (e.g., 5-fold) to ensure both classes (resistant and susceptible) are proportionally represented in each fold.

- Simplified Models:

- Use simple linear models (e.g., logistic regression) instead of complex models like neural networks.

- Feature Selection:

- Use only the top 10 genes (or fewer) to minimize noise and overfitting risk.

- Leave-One-Out Cross-Validation (LOOCV):

-

Train the model on all samples but one and test on the held-out sample, repeating for all samples.

-

Suitable for small datasets but computationally intensive.

-

3. Feasibility

-

With 24 samples and 2 classes , stratified 5-fold cross-validation provides training sets of 19 samples and test sets of 5 samples.

-

If each class has reasonable representation in both folds (resistant vs. susceptible), this setup is manageable.

Would you like to proceed with stratified cross-validation or explore leave-one-out cross-validation? I can implement a logistic regression model to test its ability to classify samples based on the top 10 genes.

Me:

First let’s do LDA.

ChatGPT:

The Linear Discriminant Analysis (LDA) model results show strong classification performance: Key Metrics

-

Accuracy: 95.8% (23 out of 24 samples correctly classified).

- Confusion Matrix:

-

Resistant: 12 correctly classified, 0 misclassified.

-

Susceptible: 11 correctly classified, 1 misclassified.

-

- Classification Report:

-

Precision: 0.92 for resistant, 1.00 for susceptible.

-

Recall: 1.00 for resistant, 0.92 for susceptible.

-

F1-Score: Both classes have an F1-score of 0.96.

-

These results indicate that the top 10 genes provide a strong basis for separating resistant and susceptible groups using LDA. Would you like to visualize the LDA result (e.g., in 2D space), proceed with cross-validation, or explore another analysis?

[Much intervening dialog]

Me:

I get a different top 10 genes for mutual information each time I run this. Is there a stochastic aspect to the algorithm?

ChatGPT:

Yes, the mutual information calculation algorithm in sklearn.feature_selection.mutual_info_classif has a stochastic aspect due to the following:Why the Results Vary

- K-Nearest Neighbors (kNN):

- The algorithm uses kNN estimators to estimate mutual information, and the kNN process can be influenced by random tie-breaking in distance calculations.

- Neighbor selection can vary slightly each time the function is run.

- Random Noise:

- To improve stability in numerical computations, small random noise might be added to the data internally during the calculation, further introducing variability.

- Random Initialization:

- Some aspects of internal computations might involve randomness depending on the specific implementation in

scikit-learn.

- Some aspects of internal computations might involve randomness depending on the specific implementation in

How to Address This To obtain consistent results:

- Set the Random Seed:

mutual_info_classifaccepts arandom_stateparameter. Set it to a fixed value for reproducibility:

My code for mutual information, PCA, and LDA plots

Below is the code that I finally created. It calculates mutual information averaged over several runs, and does a PCA plot and an LDA plot for the top ten mutual-information-scoring genes.

import pandas as pd

import numpy as np

from sklearn.feature_selection import mutual_info_classif

from sklearn.preprocessing import LabelEncoder

from sklearn.decomposition import PCA

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

import matplotlib.pyplot as plt

import matplotlib

matplotlib.use('Agg')

# Reload the data files

gene_counts_path = '/mnt/c/Users/inter/Downloads/GMGI/ChatGPT/salmon.merged.gene_counts_2023_seqera.tsv'

metadata_path = '/mnt/c/Users/inter/Downloads/GMGI/ChatGPT/deseq_metadata2.csv'

# Read the gene counts and metadata files

gene_counts = pd.read_csv(gene_counts_path, sep='\t', index_col=0)

metadata = pd.read_csv(metadata_path)

# Filter gene counts to include only samples present in the metadata

samples_in_metadata = metadata['sample'].tolist()

filtered_gene_counts = \

gene_counts.loc[:, gene_counts.columns.intersection(samples_in_metadata)]

# Filter low-expression genes

low_expression_threshold = 10

gene_sums = filtered_gene_counts.sum(axis=1)

filtered_gene_counts_high_expression = \

filtered_gene_counts[gene_sums > low_expression_threshold]

# Normalize and log-transform data

cpm = (filtered_gene_counts_high_expression.T /

filtered_gene_counts_high_expression.sum(axis=1)).T * 1e6

log_cpm = cpm.apply(lambda x: np.log2(x + 1), axis=1)

# Calculate variance and prepare for mutual information analysis

gene_variances = log_cpm.var(axis=1)

# Select the top 50 most variable genes

top_genes = gene_variances.nlargest(50).index

# Subset the log-transformed CPM data for these top genes

top_genes_data = log_cpm.loc[top_genes]

metadata_sorted = metadata.sort_values(by=['thermal.tolerance', 'day'])

sorted_samples = metadata_sorted['sample']

# Subset and reorder the heatmap data to match the sorted samples

top_genes_data_sorted = top_genes_data[sorted_samples]

# Calculate mutual information for subsets of 10 genes

sample_metadata = metadata.set_index('sample')

# Prepare data for mutual information calculation

# Flatten the top genes data for each sample to compare with categorical labels

expression_data = top_genes_data_sorted.T.values # Shape: (samples, genes)

# Prepare data for mutual information calculation

# Flatten the top genes data for each sample to compare with categorical labels

expression_data = top_genes_data_sorted.T.values # Shape: (samples, genes)

# Encode thermal tolerance and day as labels for mutual information analysis

labels_resilience_day = metadata_sorted[['thermal.tolerance', 'day']]

labels_combined = labels_resilience_day['thermal.tolerance'] + "-Day" + \

labels_resilience_day['day'].astype(str)

label_encoder = LabelEncoder()

encoded_labels = label_encoder.fit_transform(labels_combined)

# Calculate mutual information multiple times and average, because

# it's a stochastic algorithm

mi_scores_list = []

n_repeats = 5

for _ in range(n_repeats):

mi_scores = mutual_info_classif(expression_data, encoded_labels,

discrete_features=False,

# Each repetition uses a different

# random initialization

random_state=None)

mi_scores_list.append(mi_scores)

avg_mi_scores = np.mean(mi_scores_list, axis=0)

# Create a DataFrame for averaged results

mutual_info_results = pd.DataFrame({

'Gene': top_genes_data_sorted.index,

'Mutual_Information': avg_mi_scores

}).sort_values(by='Mutual_Information', ascending=False)

print(mutual_info_results.head(10))

# Do PCA plot for the top 10, genes in mutual information score

top_genes_to_visualize = mutual_info_results.iloc[:10]['Gene']

# Subset the log-transformed CPM data for these genes

top_genes_data = log_cpm.loc[top_genes_to_visualize]

# Perform PCA for the top 10 genes

scaler = StandardScaler()

scaled_top_10_data = scaler.fit_transform(top_genes_data.T) # Transpose so genes are features

pca_top_10 = PCA(n_components=2).fit_transform(scaled_top_10_data)

# PCA plot for Top 10 Genes

plt.figure(figsize=(12, 10))

color_map = {'resistant': 'blue', 'susceptible': 'red'}

colors = [color_map[sample_metadata.loc[sample, 'thermal.tolerance']] for sample in filtered_gene_counts_high_expression.columns]

days = sample_metadata.loc[filtered_gene_counts_high_expression.columns, 'day'].tolist()

for i, sample in enumerate(filtered_gene_counts_high_expression.columns):

plt.scatter(pca_top_10[i, 0], pca_top_10[i, 1], color=colors[i], s=50, alpha=0.7)

plt.text(pca_top_10[i, 0], pca_top_10[i, 1], f"Day {days[i]}", fontsize=8, ha='right')

plt.title("PCA of Samples Based on Top 10 Genes")

plt.xlabel("PC1")

plt.ylabel("PC2")

plt.grid(True)

# Add legend for thermal tolerance

# Add legend

legend_handles = [

plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='blue',

markersize=10, label='Resistant'),

plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='red',

markersize=10, label='Susceptible')

]

plt.legend(handles=legend_handles, title="Thermal Tolerance")

plt.savefig("ChatGPT/PCA_top_10_mutual_info_genes.png")

# Encode thermal tolerance labels

labels = metadata.set_index('sample').loc[log_cpm.columns, 'thermal.tolerance']

label_encoder = LabelEncoder()

encoded_labels = label_encoder.fit_transform(labels) # 0 = susceptible, 1 = resistant

colors = ['red' if label == 0 else 'blue' for label in encoded_labels] # Red for susceptible, blue for resistant

# Perform PCA on the selected genes

pca = PCA(n_components=2)

pca_result = pca.fit_transform(top_genes_data.T.values)

# Perform LDA on the selected genes

lda = LinearDiscriminantAnalysis(n_components=1)

lda_result = lda.fit_transform(top_genes_data.T.values, encoded_labels)

# Evaluate LDA performance

# Predict class labels using LDA

lda_predictions = lda.predict(top_genes_data.T.values)

# Evaluate LDA performance

lda_accuracy = accuracy_score(encoded_labels, lda_predictions)

lda_conf_matrix = confusion_matrix(encoded_labels, lda_predictions)

lda_report = classification_report(encoded_labels, lda_predictions,

target_names=label_encoder.classes_)

print(lda_accuracy)

print(lda_conf_matrix)

print(lda_report)

# Create side-by-side comparison plots

fig, axs = plt.subplots(1, 2, figsize=(16, 8))

# PCA Plot

axs[0].scatter(pca_result[:, 0], pca_result[:, 1], c=colors, alpha=0.7, s=100)

for i, sample in enumerate(log_cpm.columns):

axs[0].text(pca_result[i, 0], pca_result[i, 1], sample, fontsize=8, ha='right')

axs[0].set_title("PCA Plot: Samples Based on Top Genes")

axs[0].set_xlabel(f"PC1 ({pca.explained_variance_ratio_[0]*100:.2f}% variance)")

axs[0].set_ylabel(f"PC2 ({pca.explained_variance_ratio_[1]*100:.2f}% variance)")

axs[0].grid(True)

# LDA Plot

axs[1].scatter(lda_result, [0] * len(lda_result), c=colors, alpha=0.7, s=100)

for i, sample in enumerate(log_cpm.columns):

axs[1].text(lda_result[i], 0, sample, fontsize=8, ha='right', va='center')

axs[1].set_title("LDA Plot: Samples Based on Top Genes")

axs[1].set_xlabel("LDA Component 1")

axs[1].set_yticks([])

axs[1].grid(True)

# Add legends

legend_handles = [

plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='red', markersize=10, label='Susceptible'),

plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='blue', markersize=10, label='Resistant')

]

fig.legend(handles=legend_handles, title="Thermal Tolerance", loc="upper center", ncol=2)

plt.tight_layout()

plt.savefig("ChatGPT/PCA_LDA_top_10_mutual_info_genes.png")

Overfitting?

Me:

Is there a chance I’m doing any kind of overfitting by selecting the genes with maximum mutual information?

ChatGPT:

Yes, selecting genes based solely on their mutual information scores can lead to overfitting if not carefully managed. Here’s why and how to address it:

Why Overfitting Can Occur

- Gene Selection Bias :

- Selecting genes with the highest mutual information inherently optimizes for the current data.

- These genes may not generalize well to new or unseen samples.

- Small Sample Size :

- With a small number of samples (e.g., 24 in your case), mutual information scores can be sensitive to random noise or sample-specific patterns.

- Multiple Hypothesis Testing :

- Considering many genes inflates the chance of identifying genes that appear significant due to random variation.

Mitigation Strategies

- Cross-Validation for Gene Selection :

- Use nested cross-validation :

-

Outer loop: Evaluate model performance.

-

Inner loop: Select the best genes within each training fold to avoid data leakage.

- Ensures that gene selection does not directly influence the test set performance.

- Split Data for Testing :

- Reserve a subset of samples as a true hold-out test set .

- Select genes and train models only on the remaining data.

- Permutation Testing :

- Randomly shuffle the labels and recalculate mutual information scores.

- Compare actual scores to this null distribution to assess significance.

- Biological Validation :

- Cross-reference selected genes with biological literature or pathway databases to confirm relevance.

- Prioritize genes that have known roles in related processes.

- Alternative Metrics :

- Complement mutual information with other selection criteria, such as variance, fold change, or statistical tests (e.g., ANOVA or t-tests).

A Suggested Workflow To reduce overfitting risk, you can:

-

Perform nested cross-validation or leave-one-out cross-validation.

-

Use mutual information as a preliminary step, followed by validation on a hold-out set.

-

Combine gene selection with biological interpretation to refine the final list.

Would you like a detailed example of nested cross-validation for gene selection?

Me:

let’s take a step back. how many samples are we working with?

ChatGPT:

You are working with 24 samples , as per the metadata file and the filtered gene count matrix. These samples include:

-

Thermal Tolerance Groups: Likely divided into

resistantandsusceptible. -

Time Points: Likely taken at

Day 0andDay 30.

Would you like to confirm the exact breakdown of the samples across these categories? If so, I can guide you on how to summarize this from your metadata file.

Me:

with this number of samples, and roughly 32000 genes, what will we need to determine a statistically significant result showing for genetic biomarkers for thermal resistance?

ChatGPT:

With 24 samples and around 32,000 genes , identifying statistically significant genetic biomarkers for thermal resistance is challenging due to the high-dimensional nature of the data. Here’s a detailed breakdown of what’s needed to achieve robust results:

Challenges

- Multiple Testing Problem :

- Testing 32,000 genes increases the risk of false positives.

- Adjusting for multiple comparisons (e.g., Bonferroni, Benjamini-Hochberg) reduces the chance of Type I errors but lowers statistical power.

- Small Sample Size :

- With only 24 samples, you have limited statistical power to detect small but meaningful effects.

- Small sample sizes are also more susceptible to noise and overfitting.

Statistical Power and Sample Size Power Analysis : To detect significant differences:

- Define:

-

Effect Size (\(d\)): The magnitude of the difference in gene expression between groups (e.g., resistant vs. susceptible).

-

Significance Level (\(\alpha\)): Typically set at 0.05.

-

Power ($1-\beta$) : The probability of detecting a true effect, often set to 0.8 or 0.9.

-

- Tools like G*Power or Python libraries (e.g.,

statsmodels) can estimate required sample sizes given \(d\) and \(\alpha\). Example Calculations : - Detecting a large effect size (\(d > 1.2\)):

- Requires fewer samples (e.g., ~8 per group for \(1-\beta = 0.8\)).

- Detecting a small effect size (\(d < 0.5\)):

- Requires significantly more samples (~50–100 per group).

Strategies to Improve Statistical Significance

- Reduce Dimensionality :

- Use feature selection (e.g., variance filtering, mutual information, or differential expression analysis) to focus on the most relevant genes.

- Limit testing to biologically plausible candidates (e.g., stress response genes).

- Batch Effects and Noise Reduction :

- Control for potential batch effects in RNA-seq data (e.g., sequencing batch, library preparation).

- Normalize data thoroughly (e.g., with

DESeq2oredgeR).

- Combining Data Across Studies :

- Use publicly available datasets or replicate experiments to increase sample size.

- Resampling Methods :

- Use bootstrap resampling or permutation tests to estimate the stability of your findings.

- Cross-validate findings to confirm generalizability.

Criteria for Statistical Significance

- Adjusted P-Values :

- Use Benjamini-Hochberg FDR to identify genes with \(q\)-values < 0.05 as significant.

- Avoid overly conservative corrections like Bonferroni unless warranted.

- Biological Effect Size :

-

Focus on genes with large log fold changes ($$ \text{log2FC} > 1$$) alongside statistical significance.

-

- Validation :

- Validate findings in an independent dataset or experimental system.

Next Steps Would you like to:

-

Perform a power analysis for a specific effect size?

-

Filter genes to reduce the dimensionality of the data?

-

Proceed with differential expression analysis or biomarker selection?

Me:

let’s try a power analysis

ChatGPT:

Analyzed

from statsmodels.stats.power import TTestIndPower

# Parameters for power analysis

effect_size = 0.8 # Medium effect size (Cohen's d)

alpha = 0.05 # Significance level

power = 0.8 # Desired power (1 - beta)

# Perform power analysis to determine required sample size per group

analysis = TTestIndPower()

required_sample_size = analysis.solve_power(effect_size=effect_size, alpha=alpha, power=power, alternative='two-sided')

required_sample_size_per_group = round(required_sample_size)

required_sample_size_total = required_sample_size_per_group * 2 # Total for both groups

required_sample_size_per_group, required_sample_size_total

For a medium effect size (Cohen’s d = 0.8) , alpha = 0.05 , and power = 0.8 :

-

Required sample size per group: 26

-

Total required sample size (both groups): 52

This indicates that your current sample size of 12 per group (24 total) is underpowered to reliably detect medium effect sizes. To proceed effectively, you can:

-

Increase the sample size if feasible.

-

Focus on larger effect sizes (e.g., Cohen’s d > 1.0).

-

Apply feature selection or prioritize specific candidate genes to reduce the scope of analysis. Would you like to analyze for larger effect sizes or adjust the parameters?